MTE Relay Server on AWS

Introduction

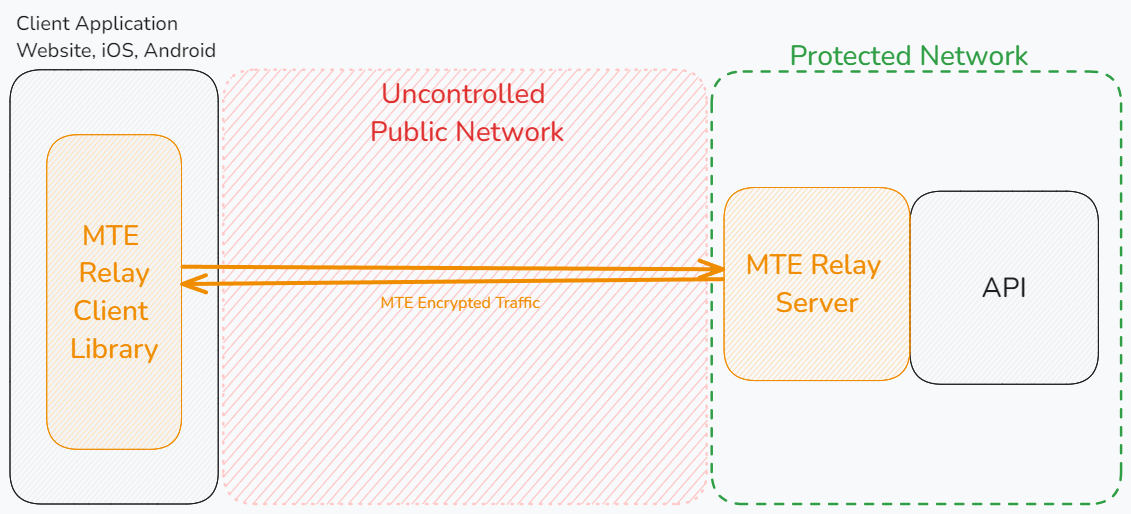

MTE Relay Server is an end-to-end encryption system that protects all network requests with next-generation application data security. It acts as a proxy server in front of your backend, communicating with an MTE Relay Client to encode and decode all network traffic. The server is highly customizable and supports integration with other services through custom adapters.

Below is a typical architecture where a client application communicates with an MTE Relay Server, which then proxies decoded traffic to backend services:

MTE Relay Servers can only communicate with MTE Relay Clients. An MTE API Relay cannot communicate with an MTE Relay Server or an MTE Relay Client SDK. MTE Relay Servers are strictly for client-to-server communications.

Prerequisites

Technical Requirements

- A web or mobile application project that communicates with a backend API over HTTP.

- Our demo app is available on GitHub: MTE Relay Demos.

Skills and Knowledge

- Familiarity with ECS and/or EKS.

- Experience with the AWS CLI.

Subscribing to MTE Relay Server on AWS Marketplace

- Navigate to the MTE Relay Server AWS Marketplace page.

- Scroll to the bottom and click the yellow "Subscribe" button.

- Select the setup type as ECS or EKS based on your preference.

- Scroll down and look for the Deployment Templates section on the right side of the screen. Click the deployment template you want to use.

- Follow the instructions in the template's README to deploy MTE Relay Server to your AWS account.

Deployment Options

MTE Relay Server is provided as a Docker image and can be deployed on AWS ECS, AWS EKS, or manually using another container runtime.

1. Elastic Container Service (ECS)

Deployment Templates

Two CloudFormation templates are available:

- Production template: Multiple instances, load balancing, and SSL configuration.

- Demo template: Lightweight, development-oriented deployment.

Requirements

- Git

- AWS CLI

- AWS permissions to launch resources

Deployment Steps

- Clone the GitHub repository: AWS CloudFormation Templates

- Choose between the demo or production template.

- Modify the parameters.json file with your configuration.

- Navigate to the respective template directory (demo or production).

- Run the deploy.sh create command to deploy, or the delete command to remove resources.


2. Elastic Kubernetes Service (EKS)

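If kubectl is already configured for your EKS cluster, save the example below as deployment.yaml, update the placeholder values, and apply it with the commands that follow.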

Example Deployment File (deployment.yaml)

apiVersion: apps/v1
kind: Deployment
metadata:
  name: aws-mte-relay-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: aws-mte-relay
  template:
    metadata:
      labels:
        app: aws-mte-relay
    spec:
      imagePullSecrets:
        - name: awscrcreds
      containers:
        - name: aws-mte-relay
          image: 709825985650.dkr.ecr.us-east-1.amazonaws.com/eclypses/mte-relay-server:4.5.0
          ports:
            - containerPort: 8080
          env:
            - name: DOMAIN_MAP
              value: '{ "relay.example.com": { "upstream": "<UPSTREAM_VALUE_HERE>", "client_id_secret": "<YOUR_CLIENT_ID_SECRET_HERE>", "cors_origins": ["<YOUR_CORS_ORIGINS_HERE>"] } }' # Update this value!
            - name: AWS_REGION
              value: <AWS REGION HERE>
---
apiVersion: v1
kind: Service
metadata:
  name: aws-mte-relay-service
spec:
  type: LoadBalancer
  selector:
    app: aws-mte-relay
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080

Commands

kubectl apply -f deployment.yaml

kubectl get all

kubectl delete -f deployment.yaml

3. Docker Image

You can also run the image using Docker, Podman, K3s, or Docker Swarm.

Commands

bash:

aws ecr get-login-password \
  --region us-east-1 \
  | docker login --username AWS \
  --password-stdin 709825985650.dkr.ecr.us-east-1.amazonaws.com

docker pull 709825985650.dkr.ecr.us-east-1.amazonaws.com/eclypses/mte-relay-server:4.5.0

PowerShell:

aws ecr get-login-password `
  --region us-east-1 `
  | docker login --username AWS `
  --password-stdin 709825985650.dkr.ecr.us-east-1.amazonaws.com

docker pull 709825985650.dkr.ecr.us-east-1.amazonaws.com/eclypses/mte-relay-server:4.5.0

Refer to the Server Configuration section for required environment variables.

Server Configuration

MTE Relay Server is configured using environment variables.

Using a DOMAIN_MAP (Recommended)

DOMAIN_MAP is a JSON object keyed by the Host header that arrives with each request.

The value for each key is a settings object that tells the proxy how to process the request.

Settings

| Field | Type | Purpose |
|---|---|---|
| upstream | string | Full URL of the upstream service (for example, http://localhost:8080 or https://api.internal) |
| pass_through_routes | string[] | Paths that use standard HTTP proxy, without MTE encryption |
| client_id_secret | string | Legacy shared secret used by some auth layers |
| cors_origins | string[] | List of allowed CORS origins for preflight requests |
| cors_methods | string[] | List of allowed CORS methods for preflight requests |
| headers | string | A JSON object of additional headers to add to proxied requests |
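Because DOMAIN_MAP is passed as a JSON string, a malformed value (for example, one with a trailing comma) is easy to ship by accident. The following is a minimal pre-deployment check, not part of MTE Relay Server itself. The function name check_domain_map is hypothetical, and treating upstream as required is an assumption of this sketch; the field names and list types are taken from the table above.

```python
import json

# Fields the settings table lists as string[].
LIST_FIELDS = ("pass_through_routes", "cors_origins", "cors_methods")


def check_domain_map(raw: str) -> list:
    """Return a list of human-readable problems; an empty list means none were found."""
    try:
        domain_map = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg} (line {exc.lineno}, column {exc.colno})"]

    if not isinstance(domain_map, dict):
        return ["top level must be a JSON object keyed by Host header"]

    problems = []
    for host, settings in domain_map.items():
        if not isinstance(settings, dict):
            problems.append(f"{host}: settings must be a JSON object")
            continue
        # Assumption: every entry needs an upstream URL.
        if not isinstance(settings.get("upstream"), str):
            problems.append(f"{host}: 'upstream' should be a string URL")
        for field in LIST_FIELDS:
            value = settings.get(field, [])
            if not (isinstance(value, list) and all(isinstance(v, str) for v in value)):
                problems.append(f"{host}: '{field}' should be a list of strings")
    return problems


if __name__ == "__main__":
    sample = '{ "relay.example.com": { "upstream": "http://internal-service" } }'
    print(check_domain_map(sample) or "no problems found")
```

Running a check like this in CI before updating the environment variable can surface the kind of error that would otherwise only appear in the container logs.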

Examples

- Single Proxy: Requests with Host header mte-api.company.com are decrypted and forwarded to http://internal-service. The /health route is proxied without MTE encoding/decoding.

{
  "mte-api.company.com": {
    "upstream": "http://internal-service",
    "client_id_secret": "8KeJmtuKweUhymNJmGHvGMrJCUtHxhQG",
    "pass_through_routes": ["/health"],
    "cors_origins": ["https://app.company.com"]
  }
}

- Multi-service proxy that handles:

  - Incoming encoded requests for billing.company.io, forwarding to http://billing-service with the /ready and /live routes unencoded.

  - Requests to auth.company.io, forwarding to http://auth-service with the /health route unencoded.

  - All other requests (any Host) are proxied to http://default-backend:8080 without encoding.

{
  "billing.company.io": {
    "upstream": "http://billing-service",
    "pass_through_routes": ["/ready", "/live"],
    "client_id_secret": "8KeJmtuKweUhymNJmGHvGMrJCUtHxhQG",
    "cors_origins": ["https://app.company.com"]
  },
  "auth.company.io": {
    "upstream": "http://auth-service:3000",
    "pass_through_routes": ["/health"],
    "client_id_secret": "heYBRbTr3QNBgQFV6x6YpfWqyEs2Tj8F",
    "cors_origins": ["https://app.company.com"],
    "cors_methods": ["GET", "POST", "OPTIONS"]
  },
  "*": {
    "upstream": "http://default-backend:8080",
    "client_id_secret": "PmNPtWJMzz46S9k8cY7du5Z6XYc9B5Ad",
    "cors_origins": ["https://app.company.com", "http://localhost:3000"]
  }
}

Export as one-line env var:

export DOMAIN_MAP='{ "billing.company.io": { "upstream": "http://billing-service", "pass_through_routes": ["/ready", "/live"], "client_id_secret": "8KeJmtuKweUhymNJmGHvGMrJCUtHxhQG" }, "auth.company.io": { "upstream": "http://auth-service", "pass_through_routes": ["/health"], "client_id_secret": "heYBRbTr3QNBgQFV6x6YpfWqyEs2Tj8F" }, "*": { "upstream": "http://default-backend:8080", "client_id_secret": "PmNPtWJMzz46S9k8cY7du5Z6XYc9B5Ad" } }'
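Hand-editing a long one-line JSON string is error-prone, so one option is to keep the map as structured data and generate the export line. The sketch below uses only the Python standard library; the helper name to_export_line and the placeholder secrets are illustrative assumptions, not part of the product.

```python
import json
import shlex

# Illustrative map; replace the placeholders with your own values.
domain_map = {
    "billing.company.io": {
        "upstream": "http://billing-service",
        "pass_through_routes": ["/ready", "/live"],
        "client_id_secret": "<YOUR_CLIENT_ID_SECRET_HERE>",
    },
    "*": {
        "upstream": "http://default-backend:8080",
        "client_id_secret": "<YOUR_CLIENT_ID_SECRET_HERE>",
    },
}


def to_export_line(mapping: dict) -> str:
    """Serialize the map to single-line JSON and quote it for a POSIX shell."""
    one_line = json.dumps(mapping)  # no indent, so the result is one line
    return f"export DOMAIN_MAP={shlex.quote(one_line)}"


print(to_export_line(domain_map))
```

Since json.dumps never emits trailing commas, the generated value always parses as JSON, and shlex.quote handles the shell quoting.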

Host header derivation

The Host header is taken directly from the authority component of the absolute URL used in the request.

For example, in https://api.example.com/users/1 the authority is api.example.com (port 443 is implicit for HTTPS), so the Host header sent by the client is exactly:

Host: api.example.com

If the URL contains an explicit port (https://api.example.com:8443/users/1) the header becomes:

Host: api.example.com:8443

DOMAIN_MAP keys must therefore use the exact same string (domain only, or domain:port) to match the incoming Host header. Alternatively, use a wildcard "*" to match any host.
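To work out which key a given client URL needs, the authority can be extracted programmatically. This is a small sketch using Python's urllib.parse; the function name host_header_for is hypothetical, and it covers only the two cases described above (no port, or an explicit port).

```python
from urllib.parse import urlsplit


def host_header_for(url: str) -> str:
    """Return the host[:port] string a client would send as the Host header."""
    parts = urlsplit(url)
    if parts.hostname is None:
        raise ValueError(f"not an absolute URL: {url!r}")
    # urlsplit lowercases the hostname; the port is None when the URL omits it.
    # Assumption: an explicitly written port is kept as written, even if it is
    # the scheme default. Real clients may drop default ports.
    if parts.port is not None:
        return f"{parts.hostname}:{parts.port}"
    return parts.hostname


print(host_header_for("https://api.example.com/users/1"))
print(host_header_for("https://api.example.com:8443/users/1"))
```

The two calls correspond to the two Host header examples in this section.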

Host Matching

MTE Relay Server performs an exact match against the Host header (case-insensitive).

If an exact match is not found, it checks for a wildcard ("*").

If no match is found, the request is rejected with 404.
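The lookup order described above can be sketched as follows. This illustrates the documented rules only and is not the server's actual implementation; the function name match_host is hypothetical, and returning None stands in for the 404 response.

```python
from typing import Optional


def match_host(domain_map: dict, host_header: str) -> Optional[dict]:
    """Return the settings for a Host header, or None if the request would be rejected."""
    wanted = host_header.strip().lower()

    # 1. Exact, case-insensitive match against the configured keys.
    for key, settings in domain_map.items():
        if key.lower() == wanted:
            return settings

    # 2. Fall back to the wildcard entry, if one is configured.
    # 3. Otherwise None, which corresponds to a 404.
    return domain_map.get("*")


example_map = {
    "billing.company.io": {"upstream": "http://billing-service"},
    "*": {"upstream": "http://default-backend:8080"},
}
print(match_host(example_map, "Billing.Company.io"))
print(match_host(example_map, "unknown.company.io"))
```

Note that a key such as api.example.com does not match a Host header of api.example.com:8443, because the comparison is on the full string.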

Using individual Environment Variables (Legacy)

Using these environment variables will result in the MTE Relay Server being configured to only handle a single domain. This method is deprecated in favor of using the DOMAIN_MAP variable. If both are provided, DOMAIN_MAP will take precedence.

- UPSTREAM - Upstream API or service URL.

- CLIENT_ID_SECRET - Secret for signing client IDs (minimum 32 characters).

- PASS_THROUGH_ROUTES - Comma-separated list of routes proxied without encoding/decoding.

- CORS_ORIGINS - Comma-separated list of allowed origins.

- CORS_METHODS - Comma-separated list of allowed methods. Default: GET, POST, PUT, DELETE.

Example:

UPSTREAM='https://api.my-company.com'

CLIENT_ID_SECRET='2DkV4DDabehO8cifDktdF9elKJL0CKrk'

PASS_THROUGH_ROUTES='/health,/version'

OUTBOUND_TOKEN='s3cr3tT0k3nV4lu3'
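When migrating from the legacy variables to DOMAIN_MAP, the comma-separated values need to become JSON arrays. The sketch below shows one way to do that conversion. It assumes the legacy variables map one-to-one onto the DOMAIN_MAP fields of the same name, which the documentation implies but does not state, and the function name legacy_to_domain_map and the choice of "*" as the default key are illustrative.

```python
# Legacy variable name -> DOMAIN_MAP field name (assumed one-to-one mapping).
CSV_FIELDS = {
    "PASS_THROUGH_ROUTES": "pass_through_routes",
    "CORS_ORIGINS": "cors_origins",
    "CORS_METHODS": "cors_methods",
}


def legacy_to_domain_map(env: dict, host: str = "*") -> dict:
    """Build a single-entry DOMAIN_MAP dict from legacy-style variables."""
    secret = env["CLIENT_ID_SECRET"]
    if len(secret) < 32:
        raise ValueError("CLIENT_ID_SECRET must be at least 32 characters")

    settings = {"upstream": env["UPSTREAM"], "client_id_secret": secret}
    for legacy_name, field in CSV_FIELDS.items():
        # Split on commas and drop empty items and surrounding whitespace.
        items = [part.strip() for part in env.get(legacy_name, "").split(",") if part.strip()]
        if items:
            settings[field] = items
    return {host: settings}


legacy = {
    "UPSTREAM": "https://api.my-company.com",
    "CLIENT_ID_SECRET": "2DkV4DDabehO8cifDktdF9elKJL0CKrk",
    "PASS_THROUGH_ROUTES": "/health,/version",
}
print(legacy_to_domain_map(legacy, host="relay.example.com"))
```

Pass the Host header your clients actually send as the host argument, or keep "*" to accept any host.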

Additional Environment Variables

- PORT - Default: 8080.

- LOG_LEVEL - One of trace, debug, info, warning, error, panic, disabled. Default: info.

Client-Side Setup

Eclypses provides client-side SDKs to integrate with MTE Relay Server:

Client-side integration typically requires less than one day of effort.

Testing & Health Checks

- Monitor container logs for startup messages

- Use the default or custom echo routes to test container responsiveness:

  - Default: /api/mte-echo

  - Custom Message: /api/mte-echo?msg=test

Expected response:

{
  "message": "test",
  "timestamp": "<timestamp>"
}
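The echo check can also be scripted, for example as a smoke test after deployment. The following sketch uses only the Python standard library. The function names and the base URL are assumptions for illustration; the route, query parameter, and response field come from the description above.

```python
import json
from typing import Optional
from urllib.parse import urlencode
from urllib.request import urlopen


def echo_url(base_url: str, msg: Optional[str] = None) -> str:
    """Build the echo route URL, optionally with a custom message."""
    url = base_url.rstrip("/") + "/api/mte-echo"
    if msg is not None:
        url += "?" + urlencode({"msg": msg})
    return url


def check_echo(base_url: str, msg: str = "test", timeout: float = 5.0) -> bool:
    """Call the echo route and confirm the message is returned unchanged."""
    with urlopen(echo_url(base_url, msg), timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body.get("message") == msg


print(echo_url("http://localhost:8080", "test"))
```

Calling check_echo("http://localhost:8080") against a running container should return True if the relay responds as described; network errors surface as exceptions from urlopen.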

Monitoring

Amazon Managed Grafana

- Create a Grafana workspace in AWS.

- Add CloudWatch as a data source (IAM role auth recommended).

- Import the provided dashboard:

Dashboard Metrics

- Requests Processed [req/sec]

- Request Time [ms]

- Upstream Proxy Time [ms]

- Average Decode Time [ms]

- Average Encode Time [ms]

Performance Metrics

Performance was measured with ~1 KB request/response payloads on a t2.micro (1 vCPU, 1 GB RAM):

| Concurrency | Req/Sec Relay | Req/Sec API | Relay % | Extra Latency (Median) |
|---|---|---|---|---|
| 400 | 185 | 188 | 98.5% | +10 ms |
| 500 | 298 | 305 | 97.8% | +17 ms |
| 550 | 338 | 355 | 95.1% | +45 ms |
| 600 | 339 | 402 | 84.3% | +202 ms |

Note: At higher volumes (≥550 concurrent), scaling across multiple Relay instances is recommended.

Troubleshooting

- Invalid Configuration

  - Check logs for missing/invalid environment variables.

- Relay unreachable

  - Verify Security Groups and load balancer settings.

- Redis connection issues

  - Ensure Redis is in the same VPC and credentials are correct.

Enable debug logs by setting the environment variable LOG_LEVEL=debug.

Security

- No sensitive data is stored in the container.

- No root privileges required.

Costs

The service is billed on a usage basis, per instance per hour.

Associated AWS services include:

| AWS Service | Purpose |
|---|---|
| ECS | Container orchestration |
| ElastiCache (Redis) | State/session management |
| CloudWatch | Logging and monitoring |
| VPC | Networking isolation |
| Elastic Load Balancer | Scaling across Relay containers |

Maintenance

Routine Updates

- Updated container images are distributed through the AWS Marketplace.

Fault Recovery

- Relaunch the Relay container task; clients will automatically re-pair.

Service Limits

- ECS: ECS Service Quotas

- CloudWatch: CloudWatch Limits

- ELB: Load Balancer Limits

Supported Regions

MTE Relay Server is supported in most AWS regions, except:

- GovCloud

- Middle East (Bahrain, UAE)

- China

Support

For assistance, contact Eclypses Support:

📧 customer_support@eclypses.com

🕒 Monday–Friday, 8:00 AM–5:00 PM MST (excluding holidays)