MTE API Relay

Introduction:

The MTE API Relay Container is a NodeJS application that encodes and proxies HTTP payloads to another MTE API Relay Container, which decodes and proxies the request to another API Service. The Eclypses MTE is a compiled software library combining quantum-resistant algorithms with proprietary, patented techniques to encode data. MTE API Relay uses FIPS 140-3 validated, quantum-resistant data encryption technology to protect data before it is sent across an uncontrolled network.

Customer Deployment

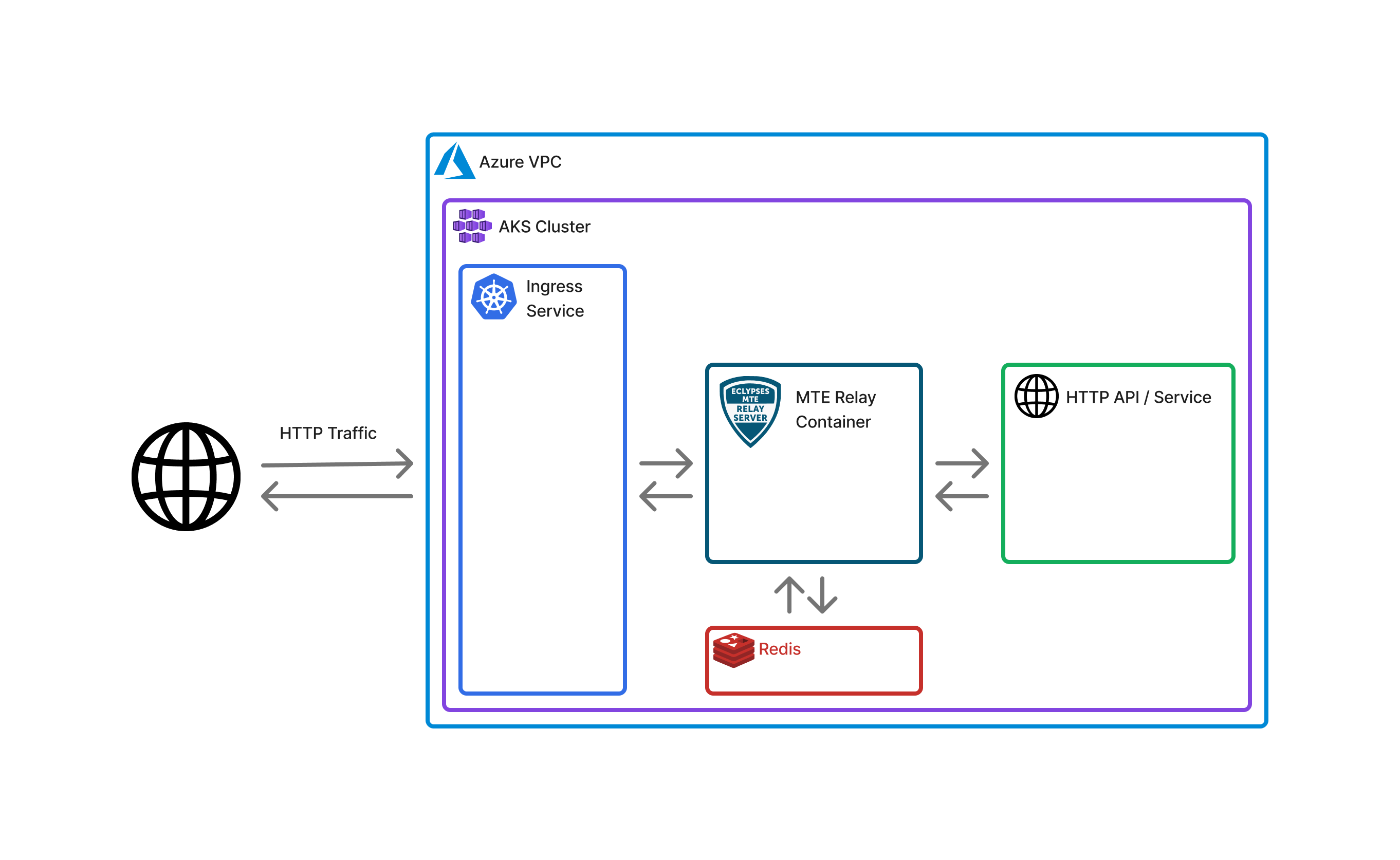

The MTE API Relay Container is used to encode HTTP requests between two server applications. In Azure, MTE API Relay is provided as a Kubernetes extension that can be installed in an existing Kubernetes cluster, or you may choose to create a new cluster to run it in.

MTE API Relay is available in the Azure Marketplace.

Typical Customer Deployment

In an ideal situation, a customer will already have (or plan to create):

- A server application that sends HTTP requests to another server or service.

- Access to both environments, so that a new service (MTE API Relay) can be stood up in each, ideally using Kubernetes and AKS.

Prerequisites and Requirements

Technical

The following are required for a successful deployment:

- An application (or planned application) that communicates with a Web API using HTTP Requests

Skills or Specialized Knowledge

- Familiarity with Azure AKS

Configuration Settings

When you subscribe to the product via the Azure Marketplace, you will be asked to complete the following configuration options.

UPSTREAM - Required

- The upstream application IP address, ingress, or URL that inbound requests will be proxied to. This should point to the partner HTTP application that this relay is launched with.

CLIENT_ID_SECRET - Required

- A secret that will be used to sign identification headers when communicating with a client. We recommend a 32+ character, randomly generated string.

OUTBOUND_TOKEN - Required

- This token must be passed in the x-mte-outbound-token header of any request that is meant to be encrypted and sent out. We recommend a 32+ character, randomly generated string.

Service Type - Required

- The type of the Kubernetes service. A "LoadBalancer" will generate a public-facing IP address. A "ClusterIP" will only generate an IP that is available within your AKS cluster.

SECRET

- A secret that must be known in order to exchange encrypted messages to and from this server. Messages sent to or received from a server configured with a different secret will fail to decode.
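The settings above recommend 32+ character, randomly generated strings. One way to produce them is Python's standard secrets module. The sketch below is an illustration, not an Eclypses-provided tool, and the helper name generate_relay_secret is hypothetical. It uses URL-safe characters, so the value can be placed in an HTTP header such as x-mte-outbound-token without escaping. Messages fail to decode when secrets differ, so the same SECRET value presumably needs to be configured on both paired relays.

```python
import secrets


def generate_relay_secret(num_bytes: int = 32) -> str:
    """Return a URL-safe random string for CLIENT_ID_SECRET, OUTBOUND_TOKEN, or SECRET.

    Hypothetical helper, not part of MTE API Relay. token_urlsafe base64-encodes
    num_bytes of randomness without padding, so 32 bytes gives 43 characters.
    """
    if num_bytes < 24:
        # 24 bytes encode to exactly 32 characters, the recommended minimum length.
        raise ValueError("use at least 24 random bytes")
    return secrets.token_urlsafe(num_bytes)


if __name__ == "__main__":
    # Print one fresh value per setting; paste them into the Marketplace form or manifest.
    for name in ("CLIENT_ID_SECRET", "OUTBOUND_TOKEN", "SECRET"):
        print(f"{name}={generate_relay_secret()}")
```

Generating each value independently keeps the token that clients send separate from the secrets the relay uses internally.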

Architecture Diagrams

Default Deployment

AKS Deployment

AKS Setup

Deploying MTE API Relay on AKS is straightforward, assuming you have already configured kubectl to connect to your AKS cluster.

Deployment File:

The deployment file below is similar to what is deployed when you launch MTE API Relay from the Azure Marketplace. Edit and apply it with care.

# deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: azure-mte-api-relay-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: azure-mte-api-relay
  template:
    metadata:
      labels:
        app: azure-mte-api-relay
    spec:
      containers:
        - name: azure-mte-api-relay
          image: <CONTAINER_IMG>
          ports:
            - containerPort: 8080
          env:
            - name: CLIENT_ID_SECRET
              value: <YOUR CLIENT ID SECRET HERE>
            - name: UPSTREAM
              value: <UPSTREAM VALUE HERE>
            - name: OUTBOUND_TOKEN
              value: <OUTBOUND_TOKEN_HERE>
            - name: SECRET
              value: <SERVER_SECRET_HERE>
---
# Service definition
apiVersion: v1
kind: Service
metadata:
  name: azure-mte-api-relay-service
  labels:
    name: azure-mte-api-relay-service
spec:
  type: ClusterIP
  ports:
    - port: 80
      targetPort: 8080
  selector:
    app: azure-mte-api-relay
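The sample manifest contains angle-bracket placeholders such as <CONTAINER_IMG>. A small pre-apply check can catch values that were never filled in. This is a minimal sketch that assumes every placeholder has the <...> form used in this file. find_unfilled_placeholders and check_manifest_file are hypothetical helpers, not part of the product or of kubectl.

```python
import re
from typing import List, Tuple

# Hypothetical check. Assumes placeholders look like the ones in the sample
# manifest, e.g. <CONTAINER_IMG> or <YOUR CLIENT ID SECRET HERE>.
PLACEHOLDER = re.compile(r"<[^<>\n]+>")


def find_unfilled_placeholders(manifest_text: str) -> List[Tuple[int, str]]:
    """Return (line_number, placeholder) for each placeholder still present."""
    hits = []
    for lineno, line in enumerate(manifest_text.splitlines(), start=1):
        for match in PLACEHOLDER.finditer(line):
            hits.append((lineno, match.group(0)))
    return hits


def check_manifest_file(path: str = "deployment.yaml") -> bool:
    """Print any unfilled placeholders in the file; return True if none remain."""
    with open(path, encoding="utf-8") as f:
        hits = find_unfilled_placeholders(f.read())
    for lineno, placeholder in hits:
        print(f"{path}:{lineno}: unfilled placeholder {placeholder}")
    return not hits
```

For example, call check_manifest_file("deployment.yaml") and run kubectl apply only when it returns True.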

To apply the deployment, run the command:

kubectl apply -f deployment.yaml

To get information about the newly created resources, run the command:

kubectl get all --all-namespaces

Remove deployment and service

To remove the deployment and service, run the command:

kubectl delete -f deployment.yaml

Usage Guide

How does MTE API Relay protect network traffic?

When your application sends a request to the first (outbound) Relay Server, that server validates that the x-mte-outbound-token header exists and is valid. It then attempts to establish an MTE Relay connection with the Relay Server specified in the x-mte-upstream header. If that succeeds, the request data is encrypted and sent to the upstream Relay Server, which decrypts the request and proxies the data to its API server.

The reverse happens for the response, so both the request and the response are encrypted while traversing public networks. Finally, the first Relay Server receives the encrypted response, decrypts the data, and returns the decrypted response to the application that made the original request.

Minimum Requirements

To use MTE API Relay to protect HTTP traffic between two servers, you need to:

- Send the initial request to the outbound Relay Server.

- Include the required headers:

| Header | Description |
|---|---|
| x-mte-outbound-token | This header should contain the value of the OUTBOUND_TOKEN environment variable. Only requests that include this token will be allowed to make outbound requests. |
| x-mte-upstream | The destination of this request once it has been encoded. It must be an MTE API Relay, so that the request can be decoded. |
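An application that calls the outbound relay from code rather than curl adds the two required headers to an ordinary HTTP request. Below is a minimal Python sketch using only the standard library. build_relay_request and the example addresses are illustrative assumptions. The request is only constructed here, not sent.

```python
import json
import urllib.request


def build_relay_request(relay_url: str, path: str, outbound_token: str,
                        upstream_relay: str, payload: dict) -> urllib.request.Request:
    """Build a POST request addressed to the outbound (first) MTE API Relay.

    Hypothetical helper. relay_url is the first relay's base URL, outbound_token
    must equal that relay's OUTBOUND_TOKEN, and upstream_relay is the base URL
    of the second relay, which decodes the request.
    """
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url=relay_url.rstrip("/") + path,
        data=body,
        method="POST",
        headers={
            "x-mte-outbound-token": outbound_token,  # required
            "x-mte-upstream": upstream_relay,        # required
            "Content-Type": "application/json",
        },
    )

# Sending it requires a reachable relay, for example:
#   with urllib.request.urlopen(req, timeout=10) as resp:
#       print(resp.status, resp.read().decode())
```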

An example curl will look like this:

curl --location 'http://<RELAY_SERVER_1>/api/login' \
  --header 'x-mte-outbound-token: 12098312098123098120398' \
  --header 'x-mte-upstream: http://<RELAY_SERVER_2>' \
  --header 'Content-Type: application/json' \
  --header 'Cookie: Cookie_1=value' \
  --data-raw '{
    "email": "jim.halpert@example.com",
    "password": "P@ssw0rd!"
  }'

Additional Request Options

These additional options may be included as headers to change how the request is encrypted.

| Header | Description |
|---|---|
| x-mte-encode-type | Value can be MTE or MKE. Default is MKE. |
| x-mte-encode-headers | Can be "true" or "false". Default is "true". |
| x-mte-encode-url | Can be "true" or "false". Default is "true". |
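The optional headers accept only a small set of values, so a client can validate them before sending. The sketch below assumes the values are sent as the literal, case-sensitive strings listed above. relay_option_headers is a hypothetical helper. Omitting an option leaves the relay default in effect (MKE, with headers and URL encoded).

```python
from typing import Optional

VALID_ENCODE_TYPES = {"MTE", "MKE"}


def relay_option_headers(encode_type: Optional[str] = None,
                         encode_headers: Optional[bool] = None,
                         encode_url: Optional[bool] = None) -> dict:
    """Return the optional x-mte-* headers; None means 'use the relay default'.

    Hypothetical helper, not part of MTE API Relay.
    """
    headers = {}
    if encode_type is not None:
        if encode_type not in VALID_ENCODE_TYPES:
            raise ValueError(f"encode_type must be one of {sorted(VALID_ENCODE_TYPES)}")
        headers["x-mte-encode-type"] = encode_type
    if encode_headers is not None:
        # The relay expects the strings "true"/"false", not Python's True/False.
        headers["x-mte-encode-headers"] = "true" if encode_headers else "false"
    if encode_url is not None:
        headers["x-mte-encode-url"] = "true" if encode_url else "false"
    return headers
```

For instance, relay_option_headers("MTE", False, False) yields the same three option headers as the example curl command that follows.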

Example Curl:

curl --location 'http://<RELAY_SERVER_1>/api/login' \
  --header 'x-mte-outbound-token: 12098312098123098120398' \
  --header 'x-mte-upstream: http://<RELAY_SERVER_2>' \
  --header 'x-mte-encode-type: MTE' \
  --header 'x-mte-encode-headers: false' \
  --header 'x-mte-encode-url: false' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "email": "jim.qweqwe@example.com",
    "password": "P@ssw0rd!"
  }'

Testing

Once the API Relay Server is configured:

- To test that the API Service is active and running, submit an HTTP GET request to the echo route:

curl 'https://[your_domain]/api/mte-echo'

Successful response:

{
  "echo": "test",
  "time": [time_stamp]
}
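The same check can be scripted. This guide shows different values for the echo field in different places, so the sketch below only verifies an HTTP 200 status and the presence of the echo and time fields. is_healthy_echo and check_echo are hypothetical helpers, and the base URL you pass in is your own domain.

```python
import json
import urllib.request


def is_healthy_echo(status: int, body: bytes) -> bool:
    """Return True if an /api/mte-echo response looks healthy (hypothetical check)."""
    if status != 200:
        return False
    try:
        data = json.loads(body)
    except ValueError:  # json.JSONDecodeError is a subclass of ValueError
        return False
    # Only field presence is checked; the value of "echo" is not relied on here.
    return isinstance(data, dict) and "echo" in data and "time" in data


def check_echo(base_url: str, timeout: float = 5.0) -> bool:
    """GET <base_url>/api/mte-echo and report whether the relay answered correctly."""
    url = base_url.rstrip("/") + "/api/mte-echo"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return is_healthy_echo(resp.status, resp.read())
    except OSError:
        # URLError, HTTPError, and socket timeouts are all subclasses of OSError.
        return False
```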

Monitoring

Azure Managed Grafana is the recommended way to monitor application health.

Grafana Setup


Enable Azure Monitor

- Navigate to the Azure portal and sign in with your Azure account.

- In the left-hand menu, select "Monitor" to open the Azure Monitor service.

- Click on "Metrics" under the "Insights" section.

- Select the appropriate subscription and resource group where your AKS cluster is deployed.

- Choose your AKS cluster from the list of resources.

- Click on "Add metric" to start monitoring specific metrics for your AKS cluster.

- Select the metrics you want to monitor, such as CPU usage, memory usage, and network traffic.

- Configure alerts by clicking on "Alerts" in the Monitor menu and setting up alert rules based on the metrics you are monitoring.

- Optionally, integrate Azure Monitor with Grafana for advanced visualization by following the Azure Managed Grafana setup instructions.

- For more detailed instructions, refer to the Azure Monitor documentation.


Enable Managed Grafana

- Navigate to the Azure portal and sign in with your Azure account.

- In the left-hand menu, select "Create a resource."

- Search for "Azure Managed Grafana" and select it from the list.

- Click "Create" to start the setup process.

- Fill in the required details, such as subscription, resource group, and instance details.

- Click "Review + create" and then "Create" to deploy the Grafana instance.

- Once the deployment is complete, navigate to the resource and click on the "Endpoint" URL to access Grafana.

- Configure data sources and dashboards as needed to monitor your AKS cluster and other resources.

- For more detailed instructions, refer to the Azure Managed Grafana documentation.

Download Grafana Dashboard

To download the Grafana dashboard configuration file, click the link below:

Download Grafana Dashboard

Troubleshooting

The cause of most problems can be determined by consulting the logs. Some common problems that might occur are:

- Invalid Configuration

- Network misconfiguration

Some specific error examples include:

- I cannot reach my API Relay Container.

- Double-check your network settings.

- Check the logs.

- The server exits with a ZodError.

- This is a configuration validation error. Look at the "path" property to determine which of the required environment variables you did not set. For example, if the path property shows "upstream," then you forgot to set the environment variable "UPSTREAM."

- Server cannot reach Redis.

- Check that Redis is running in the same virtual network (VNet) as the relay.

- If using credentials, check that credentials are correct.
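A ZodError at startup means a required variable is missing or invalid, so it can help to check the environment before the container starts, for example in a CI step. The sketch below assumes the required set is exactly the three variables marked Required in Configuration Settings, plus the 32-character recommendation. relay_config_problems is hypothetical and does not reproduce the relay's actual validation rules.

```python
import os
from typing import List, Mapping

# Assumption: the variables marked "Required" in Configuration Settings.
REQUIRED_VARS = ("UPSTREAM", "CLIENT_ID_SECRET", "OUTBOUND_TOKEN")
RECOMMENDED_MIN_LENGTH = 32  # recommendation for CLIENT_ID_SECRET and OUTBOUND_TOKEN


def relay_config_problems(env: Mapping[str, str] = os.environ) -> List[str]:
    """Return human-readable problems with the relay's environment variables."""
    problems = []
    for name in REQUIRED_VARS:
        if not env.get(name, "").strip():
            problems.append(f"{name} is not set")
    for name in ("CLIENT_ID_SECRET", "OUTBOUND_TOKEN"):
        value = env.get(name, "")
        # Only warn about length when a value is present; missing values are reported above.
        if value and len(value) < RECOMMENDED_MIN_LENGTH:
            problems.append(f"{name} is shorter than {RECOMMENDED_MIN_LENGTH} characters")
    return problems
```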

MTE API Relay Server includes a Debug flag, which you can enable by setting the environment variable "DEBUG" to true. This enables additional logging that you can review in your container logs (for example, with kubectl logs or in Azure Monitor) to determine the source of any issues.

Health Check

For short- and long-term health, monitor the logs regularly.

Echo Route

The echo route can be called by an automated system to determine whether the service is still running. To test that the API Service is active and running, submit an HTTP GET request to the echo route:

curl 'https://[your_domain]/api/mte-echo'

Successful response:

{
  "echo": true,
  "time": [time_stamp]
}
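For automated monitoring, a common pattern is to report the service as down only after several consecutive failed probes, so a single dropped request does not trigger an alert. In the sketch below, the probe is any callable returning True or False, for example a function that performs the GET request shown above. The name watch_echo and the default threshold and interval are arbitrary assumptions.

```python
import time
from typing import Callable, Iterator


def watch_echo(probe: Callable[[], bool], failure_threshold: int = 3,
               interval_s: float = 30.0, max_checks: int = 0,
               sleep: Callable[[float], None] = time.sleep) -> Iterator[str]:
    """Yield 'ok', 'failing', or 'down' after each probe (hypothetical helper).

    'down' is reported once failure_threshold consecutive probes have failed.
    max_checks=0 means keep probing forever.
    """
    consecutive_failures = 0
    checks = 0
    while max_checks == 0 or checks < max_checks:
        if checks:
            sleep(interval_s)  # wait between probes, not before the first one
        checks += 1
        if probe():
            consecutive_failures = 0
            yield "ok"
        else:
            consecutive_failures += 1
            yield "down" if consecutive_failures >= failure_threshold else "failing"
```

Injecting sleep keeps the loop testable. Recovering from "down" happens on the first successful probe, which resets the failure count.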

Performance Metrics

100 concurrent connections, 5-minute duration, 1kb request and response

| | # Requests | Req/second | Median Response Time (ms) | p90 (ms) | p99 (ms) |
|---|---|---|---|---|---|
| Control API | 28050 | 94 | 52 | 59 | 87 |
| MTE Relay | 27763 | 92.6 | 62 | 71 | 87 |
| Result | 98.9% | 98.5% | +10ms | +12ms | +0ms |

200 concurrent connections, 5-minute duration, 1kb request and response

| | # Requests | Req/second | Median Response Time (ms) | p90 (ms) | p99 (ms) |
|---|---|---|---|---|---|
| Control API | 54938 | 183 | 55 | 66 | 110 |
| MTE Relay | 53724 | 179 | 72 | 110 | 190 |
| Result | 97.8% | 97.8% | +17ms | +44ms | +80ms |

250 concurrent connections, 5-minute duration, 1kb request and response

| | # Requests | Req/second | Median Response Time (ms) | p90 (ms) | p99 (ms) |
|---|---|---|---|---|---|
| Control API | 67966 | 226 | 55 | 72 | 130 |
| MTE Relay | 64465 | 215 | 100 | 170 | 280 |
| Result | 94.8% | 95.1% | +45ms | +98ms | +150ms |

Note: We recommend load-balancing requests between multiple instances of MTE Relay once you reach this volume of traffic. Please monitor your application carefully.

300 concurrent connections, 5-minute duration, 1kb request and response

| | # Requests | Req/second | Median Response Time (ms) | p90 (ms) | p99 (ms) |
|---|---|---|---|---|---|
| Control API | 80249 | 267.57 | 58 | 92 | 190 |
| MTE Relay | 67696 | 225.74 | 260 | 350 | 450 |
| Result | 84.3% | 84.3% | +202ms | +258ms | +260ms |
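In each table, the Result row compares MTE Relay against the control API. The throughput columns show MTE Relay as a percentage of the control, and the latency columns show the difference in milliseconds. The sketch below reproduces that arithmetic for the 200-connection run. RunStats and result_row are illustrative names. Recomputing other runs this way may differ from the published percentages in the last decimal place.

```python
from dataclasses import dataclass


@dataclass
class RunStats:
    requests: int
    req_per_s: float
    median_ms: float
    p90_ms: float
    p99_ms: float


def result_row(control: RunStats, relay: RunStats) -> dict:
    """Throughput as % of control (1 decimal); latency as added milliseconds."""
    return {
        "requests_pct": round(100 * relay.requests / control.requests, 1),
        "req_per_s_pct": round(100 * relay.req_per_s / control.req_per_s, 1),
        "median_delta_ms": relay.median_ms - control.median_ms,
        "p90_delta_ms": relay.p90_ms - control.p90_ms,
        "p99_delta_ms": relay.p99_ms - control.p99_ms,
    }


# Figures from the 200-connection table above.
control_200 = RunStats(54938, 183, 55, 66, 110)
relay_200 = RunStats(53724, 179, 72, 110, 190)
print(result_row(control_200, relay_200))
# {'requests_pct': 97.8, 'req_per_s_pct': 97.8, 'median_delta_ms': 17, 'p90_delta_ms': 44, 'p99_delta_ms': 80}
```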

Routine Maintenance

Patches/Updates

Updated images are distributed through the marketplace.

Emergency Maintenance

Handling Fault Conditions

Tips for solving error states:

- Review all tips from the Troubleshooting section above.

- Check the logs for more information.

- Configuration mismatch: double-check the environment variables for errors.

How to recover the software

The MTE API Relay deployment on your AKS cluster can be relaunched. Although in-flight sessions may be interrupted, the container automatically manages re-pairing with the upstream MTE API Relay server, so end users should not be affected.

Support

The Eclypses support center is available to assist with inquiries about our products from 8:00 am to 8:00 pm MST, Monday through Friday, excluding Eclypses holidays. Our committed team of expert developer support representatives handles all incoming questions directed to the following email address: customer_support@eclypses.com